Neural networks provide a vast array of functionality in the realm of statistical modeling, from data transformation to classification and regression. Unfortunately, due to the computational complexity and generally large volume of data involved, the training of so-called deep learning models has historically been limited to those with considerable computing resources. However, with the advancement of GPU computing, and now a large number of easy-to-use frameworks, training such networks is accessible to anybody with a basic knowledge of Python and a personal computer. In this post we'll go through the process of training your first neural network in Python using an exceptionally readable framework called Chainer. You can follow along with the tutorial here or via the Jupyter Notebook.


We'll start by making one of the simplest neural networks possible, a linear regression. Then we'll move into more complex territory by training a model to classify the MNIST handwritten digits dataset (a standard benchmark for deep learning models).


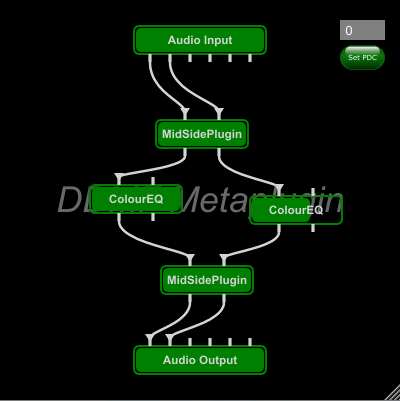


Should you wish to execute the code examples below, you will need to install Chainer, Matplotlib, and NumPy. These are easily installed through the Python package manager, pip.

Make sure to upgrade your version of Cython if you have previously installed it or have it installed via Anaconda. If you don't do this you may receive a cryptic error during the Chainer installation process.

If you have Jupyter installed, then you can just download the notebook structured around this post and run it on your local machine. First clone the stitchfix/Algorithms-Notebooks repository on GitHub and the notebook will be contained in the chainer-blog folder.

To start, we will begin with a discussion of the three basic objects in Chainer, the chainer.Function, chainer.Optimizer and the chainer.Variable.

First we need to import a few things, which will be used throughout the notebook, including Matplotlib, NumPy, and some Chainer specific modules we will cover as we go.

Examining Chainer Variables and Functions

Let's begin by making two Chainer variables, which are just wrapped NumPy arrays, named a and b. We'll make them one value arrays, so that we can do some scalar operations with them.

In Chainer, Variable objects are both part symbolic and part numeric. They contain values in the data attribute, but also information about the chain of operations that has been performed on them. This history is extremely useful when trying to train neural networks because by calling the backward() method on a variable we can perform backpropagation or (reverse-mode) auto-differentiation, which provides our chosen optimizer with all the information needed to successfully update the weights of our neural networks.

This is possible because Chainer variables store the sequence of Chainer functions that act upon them, and each function contains an analytic expression of its derivative. Some functions you will use are parametric and contained in chainer.links (imported here as L). These are the functions whose parameters will be updated with each training iteration of our network. Other functions are non-parametric, contained in chainer.functions (imported here as F), and merely perform a predefined mathematical operation on a variable. Even the addition and subtraction operations are promoted to Chainer functions, and the history of operations of each variable is stored as part of the variable itself. This allows us to calculate derivatives of any variable with respect to any other.

Let's see how this works by example. Below we will:

Inspect the value of our previously defined variables above by using the data attribute.

Backpropagate using the backward() method on the third variable, c.

Inspect the derivatives of the variables stored in the grad attribute.
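To make the idea of "a variable that remembers its history" concrete, here is a minimal pure-Python sketch of reverse-mode differentiation on scalars. It is not Chainer's implementation: the Var class and the expression chosen for c are assumptions made for illustration only, though the data, grad, and backward() names mirror the attributes discussed above.

```python
class Var:
    """Toy scalar variable that remembers how it was computed (hypothetical)."""

    def __init__(self, data, parents=()):
        self.data = float(data)
        self.grad = 0.0
        # Each entry is (parent_variable, local_derivative_wrt_that_parent).
        self.parents = parents

    def __add__(self, other):
        return Var(self.data + other.data, ((self, 1.0), (other, 1.0)))

    def __sub__(self, other):
        return Var(self.data - other.data, ((self, 1.0), (other, -1.0)))

    def __mul__(self, other):
        return Var(self.data * other.data,
                   ((self, other.data), (other, self.data)))

    def backward(self):
        # Post-order walk: every node is listed after all of its inputs.
        order, seen = [], set()

        def visit(node):
            if id(node) in seen:
                return
            seen.add(id(node))
            for parent, _ in node.parents:
                visit(parent)
            order.append(node)

        visit(self)
        self.grad = 1.0
        # Reverse walk: push each node's gradient back to its inputs (chain rule).
        for node in reversed(order):
            for parent, local in node.parents:
                parent.grad += local * node.grad


a = Var(3.0)
b = Var(4.0)
c = a * a + b * a - b  # assumed example expression: c = a^2 + a*b - b
c.backward()
print(c.data, a.grad, b.grad)  # 17.0 10.0 2.0
```

The printed gradients match the hand-derived values dc/da = 2a + b and dc/db = a - 1 at a = 3, b = 4.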

Now that we know a little bit about the basics of what Chainer is doing, let's use it to train the most basic possible neural network, a linear regression network. Of course the solution to the least squares optimization involved here can be calculated analytically via the normal equations much more efficiently, but this process will demonstrate the basic components of each network you'll train going forward.

This network has no hidden units and involves just one input node, one output node, and a linear function linking the two of them.

To train our regression we will go through the following steps:

Generate random linearly dependent datasets.

Construct a function to perform a forward pass through the network with a Chainer Link.

Make a function to do the network training.

In general, the structure you'll want to keep common to all neural networks that you make in Chainer involves making a forward function, which takes in your different parametric link functions and runs the data through them all in sequence.

You then write a train function, which runs that forward pass over batches of your data for a number of full passes through the dataset, called epochs. After each forward pass it calculates a specified loss/objective function and updates the weights of the network using an optimizer and the gradients calculated through the backward method.

At the start Chainer practitioners often define the structure they'll be working with by specifying the Link layers (here we'll only need one). Then they will specify an optimizer to use, by instantiating one of the optimizer classes. Finally, they'll tell the optimizer to keep track of and update the parameters of the specified model layers by calling the setup method on the optimizer instance with the layer to be tracked as an argument.
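The forward/train structure described above can be sketched without any framework. The following NumPy version is an assumption-laden stand-in, not the post's Chainer code: the params dictionary plays the role of the single linear link, the hand-written gradient step plays the role of the optimizer, and the data, learning rate, and epoch count are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic, linearly dependent data: y = 2x + 1 plus a little noise.
x = rng.uniform(-1.0, 1.0, size=(200, 1)).astype(np.float32)
noise = 0.05 * rng.standard_normal((200, 1)).astype(np.float32)
y = 2.0 * x + 1.0 + noise

# Parameters of the single linear layer: one weight and one bias.
params = {"W": np.zeros((1, 1), dtype=np.float32),
          "b": np.zeros(1, dtype=np.float32)}


def forward(x_batch, params):
    """Run the data through the linear layer."""
    return x_batch @ params["W"] + params["b"]


def train(x, y, params, epochs=200, lr=0.1):
    """Full-batch gradient descent on the mean squared error."""
    n = len(x)
    for _ in range(epochs):
        error = forward(x, params) - y
        # Gradients of mean((prediction - y)^2) with respect to W and b.
        grad_W = 2.0 * x.T @ error / n
        grad_b = 2.0 * error.mean(axis=0)
        params["W"] -= lr * grad_W
        params["b"] -= lr * grad_b
    return params


train(x, y, params)
print(params["W"].ravel(), params["b"])
```

After training, the weight and bias should sit close to the slope and intercept used to generate the data.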

Plot Results During Training

The code below will train the model for 5 epochs at a time and plot the line which is given by the current network parameters in the linear link. You can see how the model converges to the correct solution as the line starts out as light blue and moves toward its magenta final state.

BONUS POINTS

If you've been following along doing your own code executions, try turning the above linear regression into a logistic regression. Use the following binary dataset for your dependent variable.

HINT

You'll want to make use of the F.sigmoid() and F.sigmoid_cross_entropy() functions.
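As a reference point for the exercise, here is what those two operations compute, written as a plain NumPy sketch rather than as Chainer calls. The function names below are local helpers, and the assumption is that the loss is fed raw (pre-sigmoid) scores; check the Chainer documentation for the exact input conventions of its own functions.

```python
import numpy as np


def sigmoid(z):
    """Squash raw scores into the interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def sigmoid_cross_entropy(logits, targets):
    """Mean binary cross-entropy computed from raw scores.

    Uses the rearranged form max(z, 0) - z*t + log(1 + exp(-|z|)),
    which avoids overflow for large positive or negative scores.
    """
    return float(np.mean(np.maximum(logits, 0.0)
                         - logits * targets
                         + np.log1p(np.exp(-np.abs(logits)))))


logits = np.array([-2.0, 0.5, 3.0])
targets = np.array([0.0, 1.0, 1.0])
print(sigmoid(logits), sigmoid_cross_entropy(logits, targets))
```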

For this third and final part we will use the MNIST handwritten digit dataset and try to classify the correct numerical value written in a 28×28 pixel image. This is a classic benchmark for supervised deep learning architectures.

For this problem we will alter our simple linear regressors by now including some hidden linear neural network layers as well as introducing some non-linearity through the use of a non-linear activation function. This type of architecture is called a Multilayer Perceptron (MLP), and has been around for quite some time. Let's see how it performs on the task at hand.

The following code will take care of downloading, importing, and structuring the MNIST set for you. In order for this to work, you'll need to download this data.py file from the Chainer GitHub repository and place it in the root directory of your script/notebook.

Now let's inspect the images to see what they look like.

Finally, we will separate the feature and target datasets and then make a train/test split in order to properly validate the model at the end.
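A train/test split of this kind can be sketched in a few lines of NumPy. The helper name, the 20% test fraction, and the random stand-in arrays below are all assumptions for illustration; the real features would be the flattened MNIST images and the targets their digit labels.

```python
import numpy as np


def train_test_split(features, targets, test_fraction, rng):
    """Shuffle the sample indices, then hold out a fraction for testing."""
    order = rng.permutation(len(features))
    n_test = int(len(features) * test_fraction)
    test_idx, train_idx = order[:n_test], order[n_test:]
    return (features[train_idx], targets[train_idx],
            features[test_idx], targets[test_idx])


rng = np.random.default_rng(0)
# Random stand-ins for 1000 flattened 28x28 images and their digit labels.
features = rng.random((1000, 784)).astype(np.float32)
targets = rng.integers(0, 10, size=1000)

x_train, y_train, x_test, y_test = train_test_split(features, targets, 0.2, rng)
print(x_train.shape, x_test.shape)  # (800, 784) (200, 784)
```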

With that out of the way, we can focus on training our MLP. As its name implies, the MLP has a number of different layers, and Chainer has a nice way to wrap up the layers of our network so that they can all be bundled together into a single object.

The FunctionSet [1]

This handy object takes keyword arguments of named layers in the network so that we can later reference them. It works like this.


Then the layers exist as properties of the class instance, and all of them can be registered for optimization at once by passing the FunctionSet instance to the optimizer's setup method:

With that tip, let's move on to structuring our classifier. We need to get from a 28×28 pixel image down to a 10-dimensional simplex (where all the numbers in the output sum to one). In the output, each dimension will represent the probability of the image being a specific digit according to our MLP classifier.

The MLP Structure

To make it simple here, let's start by including only 3 link layers in our network (you should feel free to mess around with this at your leisure later though to see what changes make for better classifier performance).

We'll need a link taking the input images, which have 28 × 28 = 784 dimensions, down to some other (probably lower) dimension, then a link stepping down the dimension even further, and finally a link stepping down to the 10 dimensions at the end (with the constraint that they sum to 1).

Additionally, since compositions of linear functions are linear, and the benefit of deep learning models is their ability to approximate arbitrary nonlinear functions, it wouldn't do us much good to stack repeated linear layers together without adding some nonlinear function to send them through [2].
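The claim that stacked linear layers collapse into a single linear layer is easy to check numerically. The layer sizes and random weights in this NumPy sketch are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Two stacked linear layers with no activation in between.
W1, b1 = rng.standard_normal((5, 8)), rng.standard_normal(5)
W2, b2 = rng.standard_normal((3, 5)), rng.standard_normal(3)
x = rng.standard_normal(8)

stacked = W2 @ (W1 @ x + b1) + b2

# The same map written as one linear layer with merged weights and bias.
W, b = W2 @ W1, W2 @ b1 + b2
single = W @ x + b

print(np.allclose(stacked, single))  # True
```

Because the two-layer stack equals a single layer exactly, the extra layer adds parameters but no extra modeling power until a nonlinearity is placed between them.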

So the forward pass we want will alternate between a linear transformation between data layers and a non-linear activation function. In this way the network can learn non-linear models of the data to (hopefully) better predict the target outcomes. Finally, we'll use a softmax cross-entropy loss function [3] to compare the vector output of the network to the integer target and then backpropagate based on the calculated loss.

Thus the final forward pass structure will look like:
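As a framework-free illustration of that structure (linear, ReLU, linear, ReLU, linear, then softmax cross-entropy), here is a NumPy sketch. It is not the Chainer code from the original notebook: the hidden width of 100, the weight initialization, and all helper names are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)


def init_linear(n_in, n_out):
    """Small random weights and zero biases for one linear layer."""
    return {"W": rng.standard_normal((n_in, n_out)) * 0.01,
            "b": np.zeros(n_out)}


# 784 -> 100 -> 100 -> 10; the hidden size of 100 is an arbitrary choice.
layers = [init_linear(784, 100), init_linear(100, 100), init_linear(100, 10)]


def relu(z):
    return np.maximum(z, 0.0)


def softmax_cross_entropy(logits, targets):
    """Mean cross-entropy between softmax(logits) and integer class labels."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(targets)), targets].mean()


def forward(x, targets, layers):
    """Linear -> ReLU -> linear -> ReLU -> linear, then the loss."""
    h = x
    for layer in layers[:-1]:
        h = relu(h @ layer["W"] + layer["b"])
    logits = h @ layers[-1]["W"] + layers[-1]["b"]
    return logits, softmax_cross_entropy(logits, targets)


# Random stand-ins for a batch of 32 flattened images and their labels.
x = rng.random((32, 784))
t = rng.integers(0, 10, size=32)
logits, loss = forward(x, t, layers)
print(logits.shape, float(loss))
```

With untrained, near-zero weights the loss should be close to log(10), the value obtained when all ten digits are predicted as equally likely.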

And when it comes time to train our model, with this size of data, we'll want to batch process a number of samples and aggregate their loss collectively before updating the weights.
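Batch processing of this kind usually comes down to shuffling the sample indices once per epoch and slicing them into chunks. The generator below is a hypothetical helper, not part of Chainer, shown here on a toy dataset of ten samples.

```python
import numpy as np


def iterate_minibatches(x, y, batch_size, rng):
    """Yield shuffled (x, y) batches that together cover the data once."""
    order = rng.permutation(len(x))
    for start in range(0, len(x), batch_size):
        idx = order[start:start + batch_size]
        yield x[idx], y[idx]


rng = np.random.default_rng(0)
x = np.arange(10, dtype=np.float32).reshape(10, 1)
y = np.arange(10)

batches = list(iterate_minibatches(x, y, batch_size=4, rng=rng))
print([len(xb) for xb, _ in batches])  # [4, 4, 2]
```

One call to the generator corresponds to one epoch; the loss for each batch is computed and the weights are updated before moving on to the next batch.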

Define the Model

To start we define the model by declaring the set of links and the optimizer to be used during training.

Construct Training Functions


Now we construct the proper functions to structure the forward pass, define the batch processing for training, and predict the number represented in the MNIST images after training.

Train the Model

We can now train the network. I'd recommend starting with a low number of epochs and a large batch size in order to reduce training time; both can be adjusted later to improve validation performance.

Make a Prediction

The last thing we must do is validate the model on our held-out set of test images to make sure that the model is not overfitting.

Thus, by simply training for around 5 epochs [4] we obtain a model that has an error rate of only 3.4% in testing!
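An error rate like the one quoted above is simply the fraction of test images whose highest-scoring class differs from the true label. The sketch below shows that calculation on a tiny made-up score matrix; the helper name and the numbers are assumptions, not results from the model in this post.

```python
import numpy as np


def error_rate(scores, targets):
    """Fraction of samples whose highest-scoring class is not the true label."""
    predictions = scores.argmax(axis=1)
    return float((predictions != targets).mean())


# Hypothetical network outputs for four samples over three classes.
scores = np.array([[0.1, 0.7, 0.2],
                   [0.8, 0.1, 0.1],
                   [0.2, 0.3, 0.5],
                   [0.6, 0.3, 0.1]])
targets = np.array([1, 0, 2, 1])
print(error_rate(scores, targets))  # 0.25
```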

Model Persistence

Should you wish to save your trained model for future use, Chainer's most recent release provides the ability to serialize link elements and optimizer states into an hdf5 format via:

Then we can quickly restore the state of our previous model and resume training from that point by loading the serialized files.


Over the course of this post you have hopefully gained an appreciation for the utility of neural network frameworks like Chainer. It's worth noting that the entire routine we developed for structuring and training a high-performance MNIST classifier with a 3.4% error rate was accomplished in just over 25 lines of code and around 10 seconds of CPU training time, in which it processed a total of 300,000 images. Of course, in my effort to prioritize simplicity I have opted for readability over elegance, so many of the techniques above should be adapted once you move beyond the learning stage. The Chainer documentation is rather extensive and covers both best practices and more advanced topics in greater detail, including modularity through object-oriented design.

[1] FunctionSet is now deprecated in the most recent Chainer release; the preferred alternative is to write your own class that inherits from Chainer's Chain class. However, in the interest of making this post as simple as possible, I've opted to use the older method and wrap the links with FunctionSet. You should refer to Chainer's own tutorial documentation for a more elegant treatment.

[2] We'll use rectified linear units for this example due to their computational efficiency and easy backpropagation.

[3] This will help impose the constraint that the output values should sum to one.

[4] This process takes under 10 seconds on my 3.1 GHz dual-core i7.
